Hi, my name is yue, from the VRoid team.

This article was created based on a talk given at "PIXIV DEV MEETUP 2024" held on September 20th, 2024.

Introduction

In February 2024, we published an article about how we adopted Rust as a server-side language. This article provides a comprehensive overview of our progress since then.

System architecture

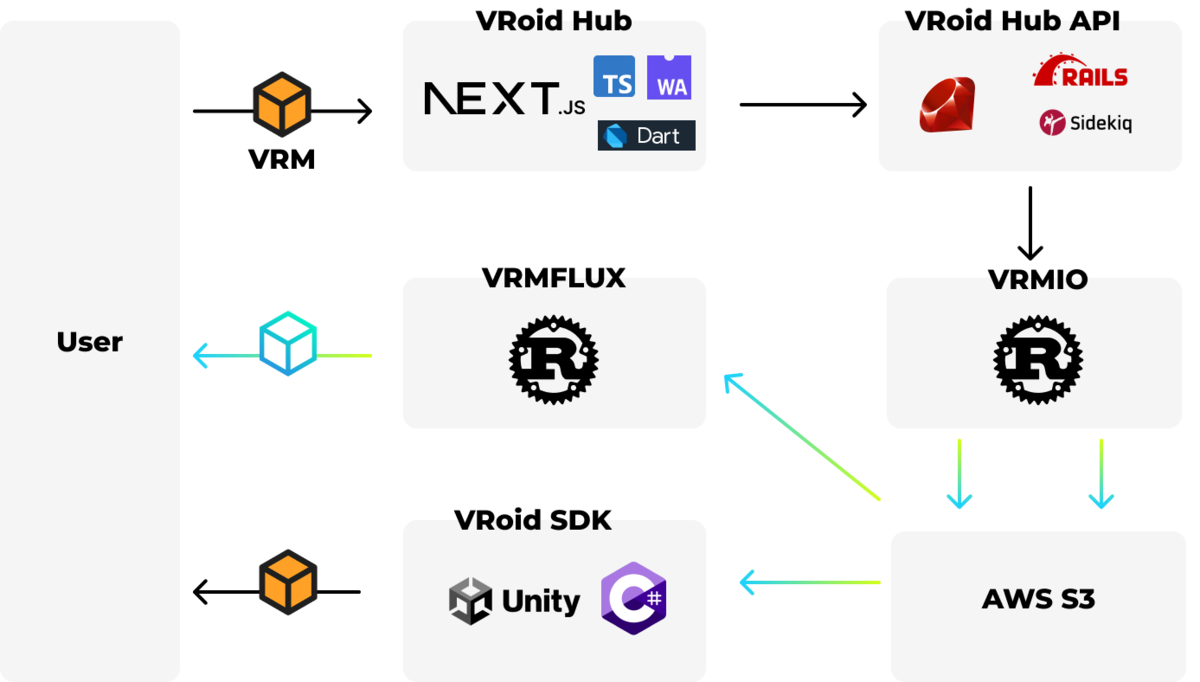

Here is an overview of our system before the migration.

This may seem a bit complex at first glance, but I will explain it one component at a time.

This is VRoid Hub's frontend application. It was built with Next.js and written in TypeScript.

It also contained some code compiled from Dart for client-side validation, and it used WebAssembly to process the textures of 3D models.

VRoid Hub's API Server is a Ruby on Rails application. It has a job queue for optimizing 3D models in the background.

We also have an internal library called vrm-ruby, written in Ruby. This makes it easy to use with Rails, but it also causes some issues. For example, the model processing logic can easily become coupled to the API code.

Also, because it has a limited feature set, it cannot perform advanced transformations.

Another server-side component is VRMIO, a CLI application for optimizing VRM files.

Since it is written in C# and powered by the Unity engine, it can perform advanced transformations, but its build setup and runtime are extremely complex.

The final component on the server side is called VRMFLUX. It is a web server for downloading 3D models on demand.

It has a small code base, so it is relatively easy to maintain, but just like vrm-ruby, it cannot perform advanced transformations. Also, because it is a user-facing service, its performance and security are critical.

To summarize, the server-side components are vrm-ruby, which provides basic VRM support; VRMIO, which optimizes VRM files; and VRMFLUX, the on-demand download server.

As you can see, vrm-ruby, VRMIO, and VRMFLUX are all doing some kind of 3D model processing, but they are all written in different languages using different runtimes.

In the worst-case scenario, a developer has to investigate every one of these systems to fix a single problem.

Also, any performance or security issue has to be fixed separately in each system, one at a time.

The question became: how do we improve what we have?

Our journey

Next, I will talk about our journey to improve our system architecture.

First, we decided to try using Rust as a language to unify and migrate our processing pipeline.

There were many reasons to choose Rust.

First, Rust performs very well. Second, thanks to its strong type system and the borrow checker, Rust gives us more control and has improved the overall correctness of our code base.

Also, Rust has a wide range of build targets and a wide variety of high-quality libraries. I will talk about libraries a bit later in this article.

We also considered how well it matched our team members’ skills before we made the decision.

The first step of migrating the system was migrating VRMFLUX. You can find more details of the process here.

After migrating VRMFLUX, the average response time became four times faster, and CPU usage dropped to a quarter of the original.

This looked good to us and gave us the confidence to continue on with this decision.

After the first step was done, the second step was converting VRMIO and vrm-ruby to Rust.

Interestingly, another developer on our team gave a talk on a similar topic three years ago, titled "The Optimization of 3D Models on VRoid Hub".

As you can see on this slide from that talk, the setup and requirements for running a portable Unity application on the server side were extremely complex. We were even using GPU emulators to make the application run.

There were many dependencies to keep track of in addition to building the application itself. After migrating the application to Rust, the build step can be as simple as running `cargo build`.

The main challenge in converting a Unity application to Rust was supporting advanced processing. For example, we had to implement texture atlasing in pure Rust and build an importer and exporter for glTF and VRM files from scratch.
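Building an importer from scratch starts with the container format. The following is a minimal sketch of reading the binary glTF (GLB) header and locating the JSON chunk, following the layout in the glTF 2.0 specification: a 12-byte header (magic `"glTF"`, version 2, total length) followed by length-prefixed chunks, all little-endian. The names `parse_glb`, `GlbHeader`, and `GlbError` are hypothetical and not taken from our code base; a real importer would also read the BIN chunk and validate padding.

```rust
/// GLB magic: the ASCII bytes "glTF" read as a little-endian u32.
const GLB_MAGIC: u32 = 0x4654_6C67;
/// Chunk type of the JSON chunk: the ASCII bytes "JSON" as a little-endian u32.
const CHUNK_JSON: u32 = 0x4E4F_534A;

#[derive(Debug, PartialEq)]
pub struct GlbHeader {
    pub version: u32,
    pub length: u32,
}

#[derive(Debug, PartialEq)]
pub enum GlbError {
    TooShort,
    BadMagic,
    UnsupportedVersion(u32),
    LengthMismatch { declared: u32, actual: usize },
    MissingJsonChunk,
}

/// Read a little-endian u32 at `offset`, or None if the slice is too short.
fn read_u32(bytes: &[u8], offset: usize) -> Option<u32> {
    let b = bytes.get(offset..offset + 4)?;
    Some(u32::from_le_bytes([b[0], b[1], b[2], b[3]]))
}

/// Validate the 12-byte GLB header and return it together with the JSON chunk.
pub fn parse_glb(bytes: &[u8]) -> Result<(GlbHeader, &[u8]), GlbError> {
    let magic = read_u32(bytes, 0).ok_or(GlbError::TooShort)?;
    if magic != GLB_MAGIC {
        return Err(GlbError::BadMagic);
    }
    let version = read_u32(bytes, 4).ok_or(GlbError::TooShort)?;
    if version != 2 {
        return Err(GlbError::UnsupportedVersion(version));
    }
    let length = read_u32(bytes, 8).ok_or(GlbError::TooShort)?;
    if length as usize != bytes.len() {
        return Err(GlbError::LengthMismatch { declared: length, actual: bytes.len() });
    }
    // The first chunk must be JSON: [chunkLength: u32][chunkType: u32][chunkData].
    let chunk_len = read_u32(bytes, 12).ok_or(GlbError::MissingJsonChunk)? as usize;
    let chunk_type = read_u32(bytes, 16).ok_or(GlbError::MissingJsonChunk)?;
    if chunk_type != CHUNK_JSON {
        return Err(GlbError::MissingJsonChunk);
    }
    let end = 20usize.checked_add(chunk_len).ok_or(GlbError::MissingJsonChunk)?;
    let json = bytes.get(20..end).ok_or(GlbError::MissingJsonChunk)?;
    Ok((GlbHeader { version, length }, json))
}
```

The returned JSON slice would then be handed to a JSON parser to build the document structure.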

Modifying glTF files with Rust

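Although glTF looks like JSON, it is actually a property graph: objects refer to each other by their index in top-level arrays (meshes point to materials, materials to textures, textures to images), and several objects can share the same target.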
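To make the graph view concrete, here is a minimal sketch that turns glTF's integer references into directed edges. It assumes heavily simplified stand-ins for the JSON objects: only a primitive's `material`, a material's `pbrMetallicRoughness.baseColorTexture.index` (flattened into one field), and a texture's `source` are modeled. `Node`, `Document`, and `edges` are hypothetical names for illustration.

```rust
/// A node in the glTF property graph, identified by its array index in the JSON.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Node {
    Mesh(usize),
    Material(usize),
    Texture(usize),
    Image(usize),
}

/// Simplified stand-ins for glTF objects, keeping only their reference fields.
pub struct Primitive { pub material: Option<usize> }
pub struct Mesh { pub primitives: Vec<Primitive> }
/// `pbrMetallicRoughness.baseColorTexture.index`, flattened for brevity.
pub struct Material { pub base_color_texture: Option<usize> }
pub struct Texture { pub source: Option<usize> }

pub struct Document {
    pub meshes: Vec<Mesh>,
    pub materials: Vec<Material>,
    pub textures: Vec<Texture>,
}

/// Collect every index reference in the document as a (from, to) edge.
pub fn edges(doc: &Document) -> Vec<(Node, Node)> {
    let mut out = Vec::new();
    for (m, mesh) in doc.meshes.iter().enumerate() {
        for prim in &mesh.primitives {
            if let Some(mat) = prim.material {
                out.push((Node::Mesh(m), Node::Material(mat)));
            }
        }
    }
    for (i, mat) in doc.materials.iter().enumerate() {
        if let Some(tex) = mat.base_color_texture {
            out.push((Node::Material(i), Node::Texture(tex)));
        }
    }
    for (i, tex) in doc.textures.iter().enumerate() {
        if let Some(img) = tex.source {
            out.push((Node::Texture(i), Node::Image(img)));
        }
    }
    out
}
```

When two materials reference the same texture index, that texture node gets two incoming edges, which is why the structure is a graph rather than a tree.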

If we try to visualize the graph, it will look something like this.

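Texture atlasing is a technique for reducing rendering costs. It combines multiple textures into a single image so that meshes can share one material, which reduces the number of draw calls.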
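Once textures are packed into one image, every UV coordinate that sampled an original texture must be remapped into that texture's tile in the atlas. The sketch below shows only that remapping step, under simplifying assumptions: all source textures are the same size, they are laid out on a square grid (four textures give a 2x2 grid), and the original UVs stay within [0, 1] with no repeat wrapping. A real packer would handle differing sizes and padding. `AtlasRect`, `grid_layout`, and `remap_uv` are hypothetical names.

```rust
/// Placement of one source texture inside the atlas, in normalized UV units.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct AtlasRect {
    pub offset: [f32; 2],
    pub scale: [f32; 2],
}

/// Lay out `n` equally sized textures on a square grid.
/// (A deliberately simple stand-in for a real bin-packing step.)
pub fn grid_layout(n: usize) -> Vec<AtlasRect> {
    if n == 0 {
        return Vec::new();
    }
    // Smallest column count whose square grid can hold all n tiles.
    let mut cols = 1;
    while cols * cols < n {
        cols += 1;
    }
    let tile = 1.0 / cols as f32;
    (0..n)
        .map(|i| AtlasRect {
            offset: [(i % cols) as f32 * tile, (i / cols) as f32 * tile],
            scale: [tile, tile],
        })
        .collect()
}

/// Map a UV from the original texture's [0, 1] space into its atlas tile.
pub fn remap_uv(uv: [f32; 2], rect: &AtlasRect) -> [f32; 2] {
    [
        rect.offset[0] + uv[0] * rect.scale[0],
        rect.offset[1] + uv[1] * rect.scale[1],
    ]
}
```

For example, with four textures the fourth one occupies the bottom-right tile, so its center UV (0.5, 0.5) becomes (0.75, 0.75) in the atlas.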

Since glTF is a property graph, we can imagine a model with definitions like this.

This model has four different meshes, four different materials, textures, and images.

With texture atlasing, we have to add and remove edges between the existing material and texture nodes in the graph to complete the change.

So if we look at what is actually happening in the code, we are essentially doing what is shown in the picture above.

We first find the edge representing the relationship we want to modify, then simply call the remove_edge and add_edge methods to change the model.
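The find, remove, and add steps can be sketched without external crates using a tiny directed graph. This is a hypothetical std-only stand-in: `Graph`, `NodeIndex`, `EdgeIndex`, and `rewire_to_atlas` are illustrative names, and while the method names mirror petgraph's `find_edge`, `remove_edge`, and `add_edge`, petgraph's `add_edge` additionally takes an edge weight. Removed edges leave an empty slot so that indices stay stable, similar in spirit to petgraph's `StableGraph`.

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct NodeIndex(pub usize);
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct EdgeIndex(pub usize);

/// Minimal directed graph; a removed edge becomes `None` so indices stay stable.
#[derive(Default)]
pub struct Graph {
    edges: Vec<Option<(NodeIndex, NodeIndex)>>,
    node_count: usize,
}

impl Graph {
    pub fn add_node(&mut self) -> NodeIndex {
        self.node_count += 1;
        NodeIndex(self.node_count - 1)
    }
    pub fn add_edge(&mut self, a: NodeIndex, b: NodeIndex) -> EdgeIndex {
        self.edges.push(Some((a, b)));
        EdgeIndex(self.edges.len() - 1)
    }
    /// Remove an edge, returning its endpoints if it still existed.
    pub fn remove_edge(&mut self, e: EdgeIndex) -> Option<(NodeIndex, NodeIndex)> {
        self.edges.get_mut(e.0)?.take()
    }
    pub fn find_edge(&self, a: NodeIndex, b: NodeIndex) -> Option<EdgeIndex> {
        self.edges.iter().position(|e| *e == Some((a, b))).map(EdgeIndex)
    }
    pub fn has_edge(&self, a: NodeIndex, b: NodeIndex) -> bool {
        self.find_edge(a, b).is_some()
    }
}

/// Point each material at the atlas texture instead of its original texture.
/// `pairs` holds (material, original texture) node pairs.
pub fn rewire_to_atlas(g: &mut Graph, pairs: &[(NodeIndex, NodeIndex)], atlas: NodeIndex) {
    for &(material, texture) in pairs {
        // 1. Find the edge for the relationship we want to change.
        if let Some(e) = g.find_edge(material, texture) {
            // 2. Remove it, then 3. connect the material to the atlas instead.
            g.remove_edge(e);
            g.add_edge(material, atlas);
        }
    }
}
```

After rewiring, the original texture nodes have no incoming material edges, so a later pass could prune them and their images if nothing else references them.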

After testing, we converted VRMIO and vrm-ruby to Rust.

By doing this, we were also able to migrate all server-side network requests to use Zstandard.

As a result, we now have a stable glTF/VRM importer and exporter. Model optimization is twice as fast on average and uses much less memory.

One important outcome of the migration is that the application is much easier to build, test, and maintain.

Looking at an overview of the system’s architecture at this point, you can see that we now have a shared Rust engine.

Here are some future possibilities for the engine.

We can compile the engine to WebAssembly or WASI targets to run it in different runtimes. We could also generate bindings if we decide to use the engine from Node or Ruby one day.
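What makes these targets possible is keeping the core free of runtime-specific code. As a minimal sketch, assuming a hypothetical core function `is_glb` and a hypothetical wrapper `vrm_engine_is_glb`, the same plain-Rust logic can be compiled unchanged for WebAssembly, or exposed through a thin C-ABI function that a host such as Ruby's FFI gem could load. This is an illustration of the pattern, not our actual binding layer.

```rust
/// Core logic: plain Rust with no knowledge of the host runtime.
/// (Hypothetical example: checks for the 4-byte GLB magic "glTF".)
pub fn is_glb(bytes: &[u8]) -> bool {
    bytes.starts_with(b"glTF")
}

/// Thin C-ABI wrapper around the core function.
/// A real shared library would also export an unmangled symbol
/// (`#[no_mangle]`, written `#[unsafe(no_mangle)]` in Rust 2024)
/// and build with `crate-type = ["cdylib"]`.
///
/// # Safety
/// `ptr` must be valid for reads of `len` bytes, or be null.
pub unsafe extern "C" fn vrm_engine_is_glb(ptr: *const u8, len: usize) -> bool {
    if ptr.is_null() {
        return false;
    }
    // SAFETY: the caller guarantees `ptr` is valid for `len` bytes.
    let bytes = unsafe { std::slice::from_raw_parts(ptr, len) };
    is_glb(bytes)
}
```

Keeping the wrapper this thin means each binding only translates arguments, while all processing logic stays in one shared, testable crate.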

Libraries & Testing

We have used various crates throughout the migration. For instance, we use gltf_rs for glTF handling, the same library used by Bevy, a game engine written in pure Rust.

We also use petgraph for graph processing. It is the same library used in SWC, the well-known JavaScript build tool.

Additionally, we use libraries such as euclid for geometric math. These libraries come from the Servo community, which develops a cutting-edge browser engine.

In essence, our system is powered by technologies derived from game and browser engines.

Here are some details about how we test the system.

We use insta for snapshot testing and Playwright for end-to-end testing of WebGL content.

We also use Sentry for error tracking. Sentry has an official Rust crate, which helps us a lot.

In addition, we use Datadog for performance monitoring, both to detect when something goes wrong and to track performance metrics.

We even built a dedicated real-time testing tool.

OSS contributions

We contributed extensively back to the ecosystem during this process. For example, we made contributions to Three.js, glTF-Transform, and many other repositories.

We also maintain OSS projects ourselves. For example, we have been maintaining three-vrm for several years.

During the migration process, we open sourced a new library called vrm-utils-rs, which contains the data structures for the VRM format.

We are also part of the VRM Consortium, so we are deeply involved in discussing and designing the 3D model file format itself.

Summary

At VRoid, we will continue to focus on improving existing systems and user experiences.

We have many more plans to enhance our service this year.

Please stay tuned.